When thinking about the future, we often expect things to run smoothly and assume that people will act fairly rationally, or in the same way as we would act in an ideal situation. The reality, however, is often odder and less clear-cut. People use new technologies and products in ways that the engineers did not consider when developing them. In addition, technologies may have surprising and undesired consequences, especially when they become widely adopted. In order to prepare for more surprising uses and, if necessary, to prevent them, it is useful to examine things through misuse and side effects.

Unintended consequences of digitisation

Digitisation and artificial intelligence promise to significantly change our society and the ways we do things. Smart contracts and a seamless flow of money will change our ways of doing business, image recognition algorithms will detect cancer and arrange our picture library while doing so, and AI assistants will organise our daily chores. However, these developments also have a dark side.

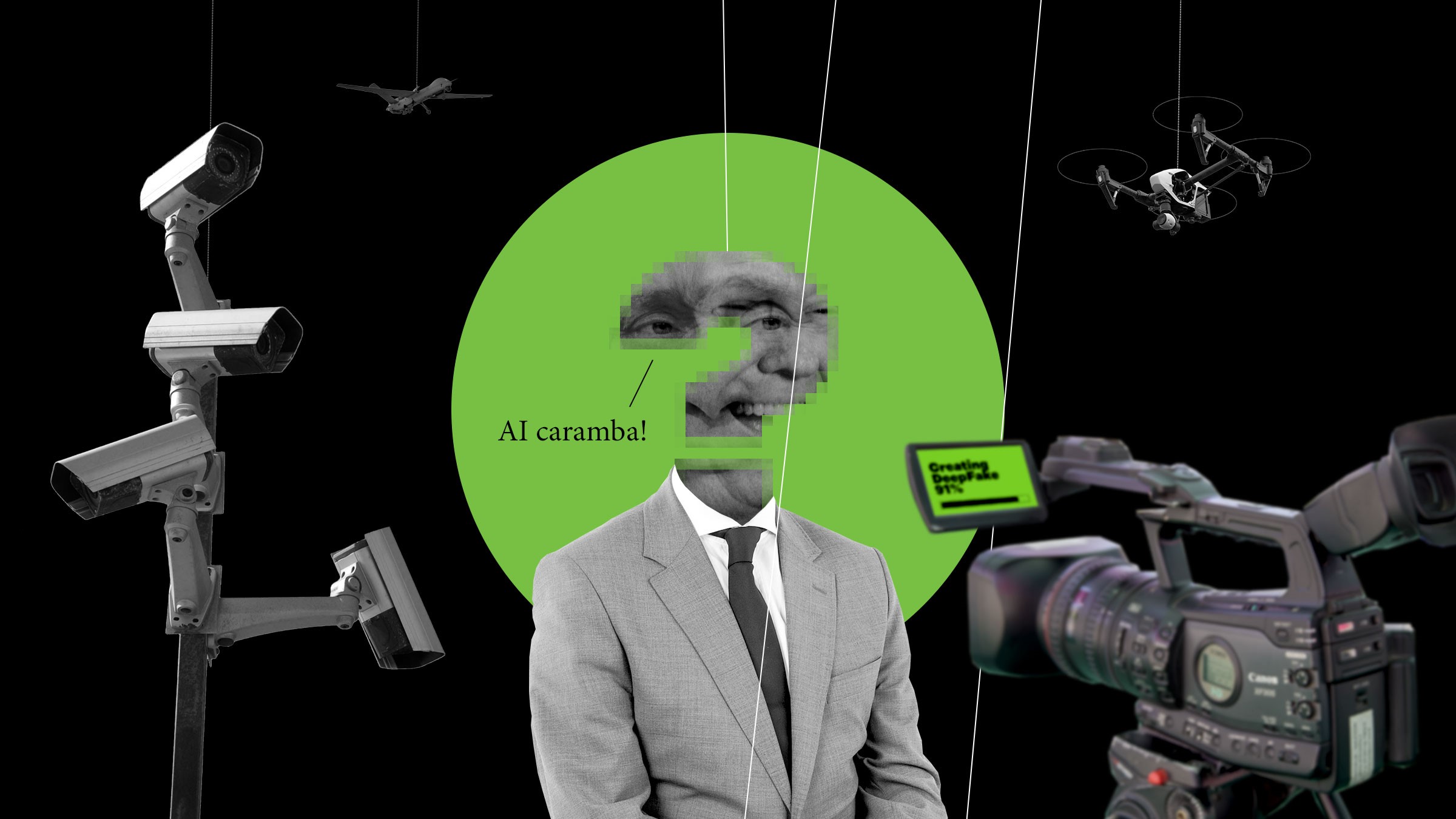

Editing images, audio and video has become so easy that one can no longer trust what one sees. Using artificial intelligence, it is relatively easy to add Nicolas Cage to any film, for example, or to make Barack Obama say anything on video. Fake news videos purporting to be genuine spread easily. Google Duplex promises that artificial intelligence will call our hair stylist for us, but will we instead use it to handle socially difficult situations, as futures researcher Gerd Leonhard has suggested?

The use of artificial intelligence in systems that support decision-making has led to the return of old prejudices. Because the algorithms learn from existing data, they may easily replicate our old preconceptions by, for example, assuming that a nurse is female and a doctor is male. Artificial intelligence also does not understand what we mean, and it may pursue its goal using methods that we sometimes find strange.

New technology may lead to strange end results or reinforce old values and beliefs.

The problem is that we either do not know how an algorithm makes its decisions or are unable to understand it. This problem is being addressed by, among others, the AlgorithmWatch initiative, which highlights areas where algorithms are allowed to make decisions and calls for openness about the criteria used for decision-making.

Misuse and unintended consequences may also create new opportunities. For example, the automated responses from Gmail can be helpful for testing whether one's idea is viable. You can play with the oddities of learning algorithms and succeed with poorly made robots on YouTube. The downsides of development may also help us see other alternatives.

Energy-hungry algorithms

Digitisation is often presented as shiny and virtual. Things live in the cloud and data travels in arcs above the world. In reality, the digital infrastructure is in cables, switches and data centres. Maintaining this infrastructure consumes energy. Artificial intelligence applications also require a lot of energy, which is a challenge for self-driving cars, for example. However, one of the most rapidly growing consumers of energy is blockchain technology.

The current operational model of blockchains usually requires a lot of computing power in order to validate the chain and create new blocks, and high computing power requires a lot of electricity. The cryptocurrency Bitcoin, for example, which is based on a blockchain, is estimated to consume about the same amount of energy as Ireland, and its consumption is continuing to grow. Energy consumption could, however, drop if the operational model is changed.
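To see why creating a block can be so computationally expensive, consider a minimal sketch of a proof-of-work style search. It is not Bitcoin's actual implementation: the function name `mine`, the way the nonce is appended to the block data, and the "leading zero hex digits" difficulty rule are all simplifying assumptions made for illustration.

```python
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Search for a nonce whose hash starts with `difficulty` zero hex digits.

    Hypothetical, simplified scheme: real blockchains use a different
    block format and a numeric target rather than a string prefix.
    """
    prefix = "0" * difficulty
    nonce = 0
    while True:
        # Hash the block contents together with the candidate nonce.
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1  # No shortcut exists: just try the next candidate.


if __name__ == "__main__":
    nonce, digest = mine("example block", difficulty=3)
    print(f"found nonce {nonce} after {nonce + 1} attempts: {digest}")
```

In this sketch, each extra zero digit required multiplies the expected number of attempts by 16. The work that consumes electricity is this brute-force guessing, which is why a different operational model could use far less energy.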

Technological haunting

Technology can sometimes seem magical. Augmented and virtual reality change what we see, which may blur our perception of what is real. In addition, they create different types of realities, so we cannot be sure what someone else is seeing or how we appear to others. For example, Facebook's funny effects were inadvertently activated in a Helsingin Sanomat live broadcast about the elections, and the reporters did not seem to know that they appeared as wizards or astronauts when they spoke.

Although we teach algorithms to see as we do, they make sense of the world in their own ways. Good examples of this are images generated by neural networks, which can seem psychedelic or horrifying. MIT has even created a “nightmare machine”, which mass-produces horrifying images.

Faulty smart devices may turn a smart home into a haunted house. When algorithms control the lights and heating, the house seems to have a life of its own. Smart speakers have laughed in the middle of the night, and they can also be given commands that are not audible to humans. As the invisible digital world is linked to the physical world in an increasing number of ways, through devices, objects and the internet, the consequences may seem more like the paranormal than progress. Often we also do not know how much data smart devices collect and send to company servers.

The power afforded to the individual by new technologies

The dual use of technology, which refers to the use of technology for both civilian and military purposes, has always been a challenge. Nuclear power is a good example of this: we do not want to ban the energy production it provides, but we would like to prevent nuclear weapons from being created. Technology requires good governance and international treaties.

An additional challenge with new technologies is that they are relatively easy for individuals and small groups to obtain. For example, synthetic biology makes the design and production of synthetic pathogens possible. In order to minimise threats, the FBI co-operates with synthetic biology enthusiasts and encourages the community to engage in self-monitoring.

Quadcopters (small drones equipped with rotors) combined with facial recognition may be the precision-guided weapons of the future. Quadcopters and drones are already used for military purposes, including anonymous attacks on a Russian base in Syria. Blockchain technology enables anonymous assassination markets, where people bet on when a person will die. The person whose guess is closest wins the pot.

Therefore, when dealing with a new technology, it is useful to consider how it could be used for destruction or how criminals could benefit from it. This allows us to prepare for the more surprising uses and prevent them in advance, if necessary. It also allows us to discover tensions associated with the technology: for example, the open and decentralised nature of blockchains enables new types of operating models without intermediaries, which also means that no single entity can supervise the operations.

Tool: Misuse of technology

How can technology be misused and what would be the potential consequences?

Do the following.

- Collect weak signals about situations where people have used a new technology in a way other than that intended or where using the technology has had unexpected consequences.

- Supplement the collection by thinking of ways in which the new technology could be misused.

- What types of themes or clusters can be seen in the collection? What types of tensions do they describe?
